The McKenzie Method of Mechanical Diagnosis and Therapy (MDT) is used worldwide to classify and manage musculoskeletal (MSK) problems. The assessment includes a detailed patient history and a specific physical examination. Previous research has investigated the reliability of the MDT spinal classification system (Derangement syndrome, Dysfunction syndrome, Postural syndrome, and OTHER); however, no study has assessed the reliability of the 10 classifications grouped together as OTHER.

Objective: To investigate the inter-rater reliability of MDT-trained clinicians when using the full breadth of the MDT classification system for patients with spinal pain.

Methods: Six experienced MDT clinicians each submitted potentially eligible MDT assessment forms of 30 consecutive patients. An MSK physician and a McKenzie Institute faculty member checked the 180 forms for eligibility and completeness and blinded the provisional MDT classification. Excluding their own assessment forms, each of the six MDT clinicians classified 150 forms. Each patient could be classified into 1 of 13 diagnostic classifications (Derangement syndrome, Dysfunction syndrome, Postural syndrome, and 10 classifications grouped as OTHER). Reliability was determined using Fleiss’ kappa (κ).

Results: The reliability among six MDT clinicians, each classifying 150 patient assessment forms, was almost perfect (Fleiss’ κ = 0.82 [95% CI 0.80, 0.85]).

Conclusions: Among experienced MDT clinicians, reliability in classifying the assessment forms of patients with spinal pain is almost perfect when the full breadth of the MDT system is used. Future research should investigate the reliability of the full MDT system among clinicians with lower levels of training.

Mechanical Diagnosis and Therapy (MDT), also called the “McKenzie Method,” has been widely used by physical therapists and other health care practitioners as an approach for patients with musculoskeletal disorders.1,2 The MDT assessment includes a detailed patient history and a specific physical examination, within a biopsychosocial context, recognising potential drivers of pain and disability.2-6 The physical examination includes postural observation, assessment of movement loss, neurological testing, establishing and retesting baselines, and the use of repeated movements, sustained positions, and other tests (e.g., sacroiliac joint pain provocation tests). The symptomatic and mechanical responses to different loading strategies guide the clinician towards a provisional MDT classification and classification-based treatment. In subsequent sessions, the provisional classification can be confirmed, rejected, or modified.7,8 In addition to specific exercises and postures, MDT aims to promote patient-specific education and self-management, embedded in a biopsychosocial context.3,4,9

Originally, the MDT system consisted of three main syndromes for classifying patients with musculoskeletal (MSK) conditions: Derangement, Dysfunction, and Postural. Prevalence data from the last two decades show that Derangement syndrome is highly prevalent in the spine, whereas Dysfunction and Postural syndromes are relatively rare.10 Patients not exhibiting the characteristics of any of these three syndromes were classified as ‘OTHER’. Currently, OTHER contains 10 specific classifications.10 Examples include Spinal Stenosis, Mechanically Inconclusive, and Trauma. The 13 classifications (Derangement, Dysfunction, and Postural syndromes and the 10 classifications grouped as OTHER) and their operational definitions are described in Supplementary material 1 and 2.

In MDT, each classification is matched with a specific intervention; reliable classification is therefore key to selecting appropriate management, which ultimately determines the treatment outcome. If reliability is unacceptable, the chosen management may be inappropriate because it may be based on an incorrect classification. Several studies have examined the reliability of the three McKenzie syndromes, with OTHER treated as a single category.11-16

Kilpikoski et al. found substantial reliability (κ = 0.6) and 95% agreement between two MDT-trained therapists classifying patients with low back pain into the McKenzie main syndromes.13 Clare et al. reported substantial to almost perfect reliability for syndrome classification between two trained therapists: κ = 0.84 (96% agreement) for the total patient pool, κ = 1.0 (100% agreement) for lumbar patients, and κ = 0.63 (92% agreement) for cervical patients.11 Razmjou et al. reported good inter-examiner reliability (κ = 0.7) between two therapists trained in MDT.14 Dionne et al. found moderate reliability for diagnosis (κ = 0.55, P < 0.001, confidence interval (CI) 0.52, 0.58) among raters with different levels of MDT training who assessed 20 videotaped cervical patients.17 Yet, to our knowledge, no study has investigated the inter-rater reliability of clinicians using the 10 classifications with specific diagnostic criteria grouped under OTHER. One difficulty has been the low prevalence of each of these 10 classifications in primary care. For example, a prevalence survey by May and Rosedale of 750 patients seen by 54 therapists from 15 countries, working in a variety of healthcare settings, found that only 23% of patients were classified into one of the 10 OTHER classifications.8

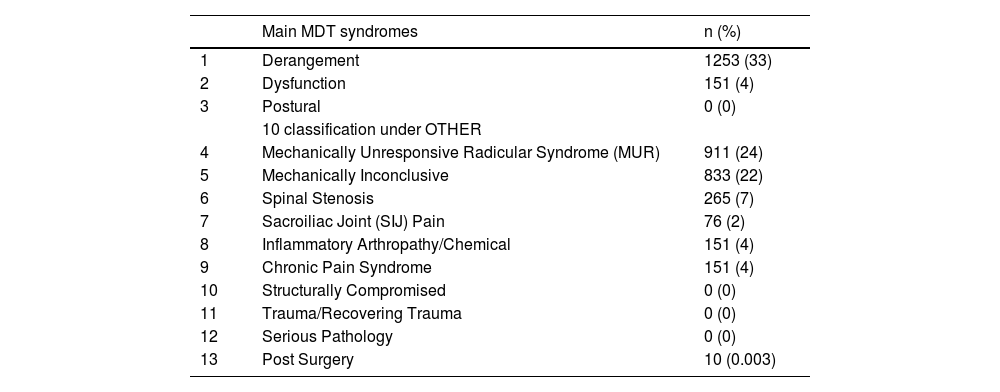

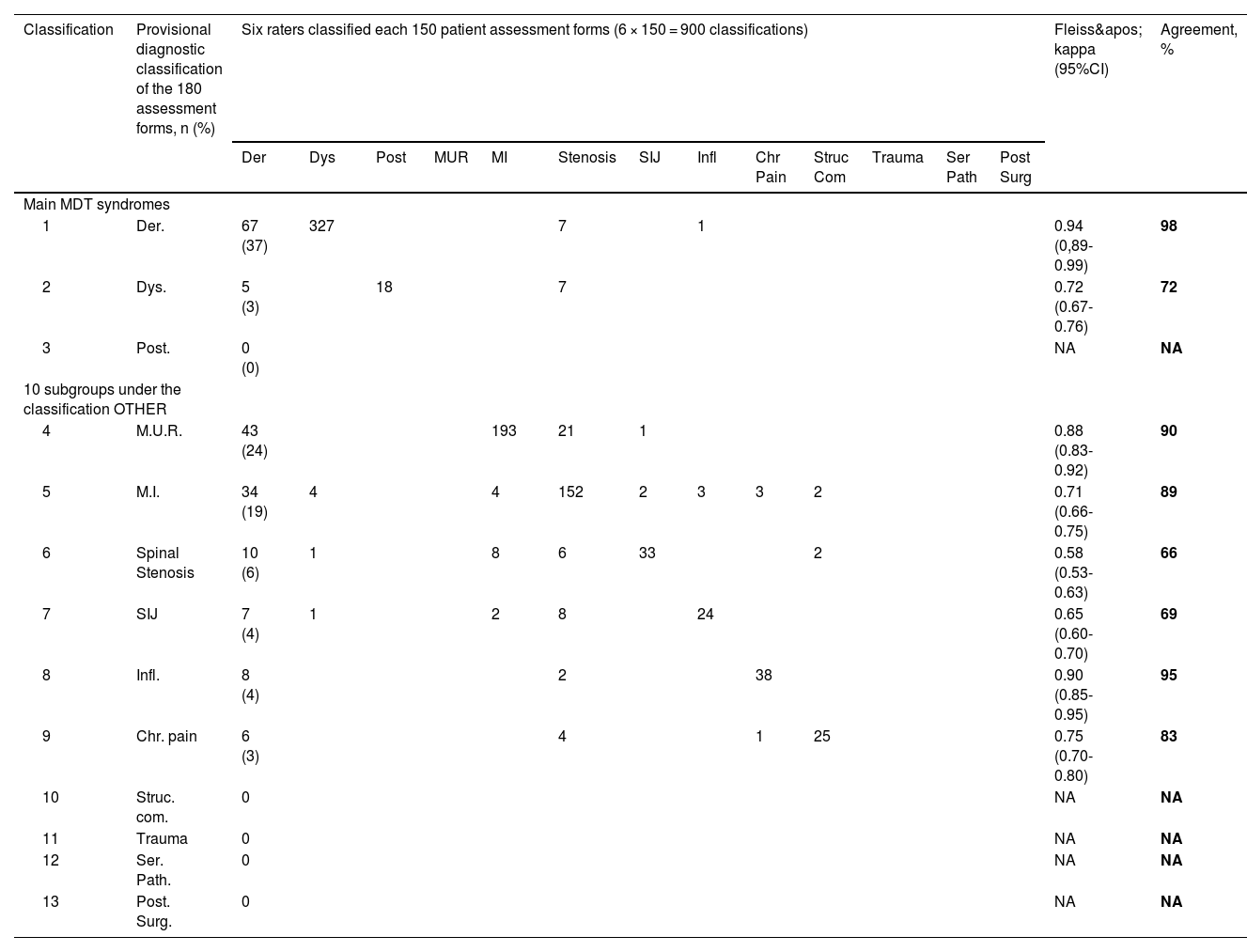

In Rugpoli, a Dutch medical centre that provides secondary and tertiary care, patients are frequently classified into one of the OTHER classifications. Analysis of 2019 data from eight physical therapists, covering 3798 patients, showed that 63% of patients were classified into one of the OTHER classifications. Table 1 shows the prevalence of the three main MDT syndromes and the 10 OTHER classifications at Rugpoli. Because the OTHER classifications are much more prevalent there than in primary care, Rugpoli is an ideal setting for a reliability study focusing on these subgroups. The purpose of this study was therefore to examine the inter-rater reliability of MDT clinicians using the full breadth of the MDT classification system for patients with spinal pain, based on MDT assessment forms.

Table 1. Prevalence of the three main Mechanical Diagnosis and Therapy (MDT) syndromes and the 10 classifications under OTHER at Rugpoli in 2019 (n = 3798).

Assessment forms of real patients were used in this study to examine the reliability of MDT classification. Patient assessment forms are often used to examine reliability.18 Correctly designed, patient assessment forms are practical to use, avoid ethical problems, are inexpensive, and can collect data from different sources.18-22 Ethical approval for the study was obtained from the Health Science Research Ethics Board at the VU University of Amsterdam, the Netherlands, in March 2020.

Study design: A two-phase, vignette-based reliability study using the files of consecutive real patients.

A two-phase study was conducted. In the first phase, five diplomate MDT therapists and one credentialed MDT therapist from different Rugpoli centres were each asked to provide 30 patient assessment forms of consecutive patients with spinal pain from Rugpoli intakes in 2019, giving a total of 180 patient assessment forms. All forms were sent electronically to two of the authors, HH (first author) and FV, who checked them for completeness, MDT classification characteristics, ambiguity regarding the clinical presentation, the presence of a provisional MDT classification, and compliance with ethics board rules (e.g., absence of personal data and age recorded only in decades). Rugpoli uses an assessment form similar to the MDT assessment form, but in a different format that aligns with the clinic's information technology system. Conclusions and any documentation of the provisional MDT classification or management were removed from the patient files. In case of uncertainties or discrepancies, the clinicians were consulted to discuss and confirm the accuracy of the case. HH and FV discussed 29 of the 180 patient assessment forms with the clinicians who submitted them, primarily because neurological examination findings had not been recorded on forms classified as Mechanically Unresponsive Radicular Syndrome. This omission reflected the clinic setting, in which a neurologist is the first to examine patients with radiculopathy; the neurologist's notes were available to the MDT therapist who created the assessment form but were not always transcribed onto it. Each assessment form was randomly assigned a number from 1 to 180 to enable tracking of responses and data collection.

In the second phase, the six MDT clinicians rated all the assessment forms except the 30 they had created themselves. Thus, each rater classified 150 assessment forms, and each form was rated by five of the six raters. Raters were instructed to review each patient assessment form based on its history and clinical presentation and to assign either an MDT syndrome (Derangement, Dysfunction, or Postural) or one of the 10 OTHER classifications: Serious Pathology, Chronic Pain Syndrome, Mechanically Inconclusive, Mechanically Unresponsive Radiculopathy (MUR), Inflammatory Arthropathy (including active Modic signs), Sacroiliac Joint Pain, Spinal Stenosis, Trauma, Post Surgery, and Structurally Compromised. All raters were blinded to the provisional MDT classification originally assigned to each form by its creator in phase one and to the classifications of the other raters. Raters were not aware of the figures in Table 1. Informed consent was obtained from each clinician.
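The allocation across the two phases can be made concrete with a short Python sketch. It is illustrative only: the function name `build_allocation`, the arbitrary seed, and the use of Python's `random.shuffle` for the random numbering are our assumptions, not the procedure the authors used. It checks the arithmetic stated above: each rater classifies 180 minus 30 = 150 forms, and each form receives five ratings.

```python
import random

N_CLINICIANS = 6
FORMS_PER_CLINICIAN = 30  # consecutive patients contributed by each clinician


def build_allocation(seed=None):
    """Map each form number (1..180) to (creator, raters) under the two-phase design.

    Hypothetical helper for illustration; not the authors' procedure.
    """
    rng = random.Random(seed)
    form_numbers = list(range(1, N_CLINICIANS * FORMS_PER_CLINICIAN + 1))
    rng.shuffle(form_numbers)  # forms receive random numbers 1..180
    allocation = {}
    for creator in range(1, N_CLINICIANS + 1):
        # Phase two: every clinician except the creator rates the form
        raters = [r for r in range(1, N_CLINICIANS + 1) if r != creator]
        for _ in range(FORMS_PER_CLINICIAN):
            allocation[form_numbers.pop()] = (creator, raters)
    return allocation


alloc = build_allocation(seed=2020)  # seed chosen arbitrarily for reproducibility
forms_per_rater = {
    r: sum(r in raters for _, raters in alloc.values())
    for r in range(1, N_CLINICIANS + 1)
}
print(forms_per_rater)                                # every rater: 150 forms
print({len(raters) for _, raters in alloc.values()})  # {5}: five ratings per form
```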

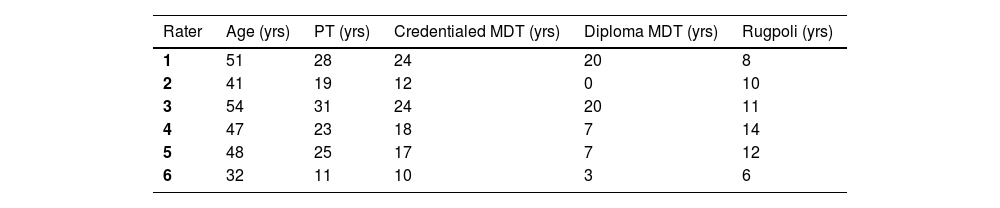

Characteristics of the researchers and the raters: The study was performed by two researchers and six raters. One researcher (HH) is a Senior Faculty member of the McKenzie Institute with 32 years of clinical experience as an MDT Diploma physical therapist treating patients with musculoskeletal spinal pain. The second researcher (FV) is a musculoskeletal physician with 30 years of clinical experience. The characteristics of the six raters are shown in Table 2.

Table 2. Characteristics of the MDT therapists who rated the assessment forms.

| Rater | Age (yrs) | PT (yrs) | Credentialed MDT (yrs) | Diploma MDT (yrs) | Rugpoli (yrs) |
|---|---|---|---|---|---|
| 1 | 51 | 28 | 24 | 20 | 8 |
| 2 | 41 | 19 | 12 | 0 | 10 |
| 3 | 54 | 31 | 24 | 20 | 11 |
| 4 | 47 | 23 | 18 | 7 | 14 |
| 5 | 48 | 25 | 17 | 7 | 12 |
| 6 | 32 | 11 | 10 | 3 | 6 |

PT = physical therapist; yrs = years.

Note: Rater 2 had basic MDT training (Credentialed) and further in-company training from two McKenzie Institute faculty members working at Rugpoli, but no formal MDT Diploma training.

For the sample size calculation with multiple outcomes and raters, the method described by Rotondi and Donner was used.23,24 Based on the levels of reliability in earlier MDT studies, κ was set at 0.8 (lower limit 0.7, upper limit 0.9).11,13,14 With an alpha of 0.05, six raters (phase two), and the prevalence rates of the three MDT syndromes and the OTHER classifications shown in Table 1, as outlined by May and Rosedale, 150 assessment forms were required.8 The sample size was calculated using R (R Project for Statistical Computing, Vienna, Austria).

Analysis: To determine inter-rater reliability among the six raters, Fleiss’ κ and its 95% confidence interval (CI) were calculated, with the provisional MDT diagnosis as the basis. Fleiss’ κ values, 95% CIs, and agreement values were also calculated for each diagnostic classification.25-27 In addition, the κ statistic and percentage agreement were calculated and reported for each pair of raters.28 An overall κ for three or more raters may better represent reliability; however, it may mask extreme agreement or disagreement between individual pairs of raters.29 Reliability and agreement measures provide different types of information. In the present study, reliability is the more appropriate measure because it indicates how well the MDT classification system distinguishes between cases.30 The κ values were interpreted using the guidelines of Landis and Koch: 0.01 to 0.20 slight, 0.21 to 0.40 fair, 0.41 to 0.60 moderate, 0.61 to 0.80 substantial, and 0.81 to 1.00 almost perfect agreement.31 The data were analysed using IBM SPSS Statistics Version 29.0.
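The study computed Fleiss’ κ in SPSS; the Python sketch below is not that implementation but a minimal from-scratch version of the standard Fleiss (1971) formulas, assuming every form carries the same number of ratings (five per form in this design). It returns the overall κ and a category-specific κ of the kind reported per classification. The function name and toy ratings are hypothetical, and confidence intervals are not computed.

```python
from collections import Counter


def fleiss_kappa(ratings, categories):
    """Overall and category-specific Fleiss' kappa (Fleiss, 1971).

    ratings    -- one list of labels per form; every form must have the same
                  number of ratings (five per form in this study's design)
    categories -- all admissible labels, e.g. the 13 MDT classifications
    """
    N, n = len(ratings), len(ratings[0])
    if n < 2 or any(len(r) != n for r in ratings):
        raise ValueError("each form needs the same number (>= 2) of ratings")
    if not set().union(*ratings) <= set(categories):
        raise ValueError("a rating uses a label that is not in `categories`")

    counts = [Counter(r) for r in ratings]  # n_ij: raters putting form i in category j
    p = {c: sum(cnt[c] for cnt in counts) / (N * n) for c in categories}

    # Mean observed agreement over forms, and agreement expected by chance
    P_bar = sum((sum(v * v for v in cnt.values()) - n) / (n * (n - 1)) for cnt in counts) / N
    P_e = sum(pj * pj for pj in p.values())
    if P_e == 1:
        raise ValueError("kappa is undefined when only one category is used")
    overall = (P_bar - P_e) / (1 - P_e)

    per_category = {}
    for c in categories:
        if p[c] in (0.0, 1.0):
            per_category[c] = None  # undefined: category never (or always) used
            continue
        disagreement = sum(cnt[c] * (n - cnt[c]) for cnt in counts)
        per_category[c] = 1 - disagreement / (N * n * (n - 1) * p[c] * (1 - p[c]))
    return overall, per_category


# Hypothetical ratings: four forms, five raters each
forms = [
    ["Der"] * 5,
    ["Der"] * 4 + ["MUR"],
    ["SS"] * 3 + ["MUR"] * 2,
    ["MUR"] * 5,
]
overall, per_cat = fleiss_kappa(forms, ["Der", "Dys", "SS", "MUR"])
print(round(overall, 3))  # 0.593
print(per_cat["Dys"])     # None: Dysfunction was never assigned
```

The overall κ equals the average of the category-specific values weighted by p_j(1 − p_j), which is why a single poorly agreed category can pull the overall value down only in proportion to how often it is used.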

Results: All patients were 18 years or older. The 180 patient assessment forms comprised 157 lumbar cases (109 with symptoms below the buttocks in one or both legs), 20 cervical cases (17 with symptoms below the shoulder in the arm(s)), and 3 thoracic cases (all with symptoms referred into the rib region). All six raters rated 150 assessment forms each, and there were no missing data. Fleiss’ κ for inter-rater reliability among the raters was 0.82 (95% CI 0.80, 0.85), indicating almost perfect reliability.

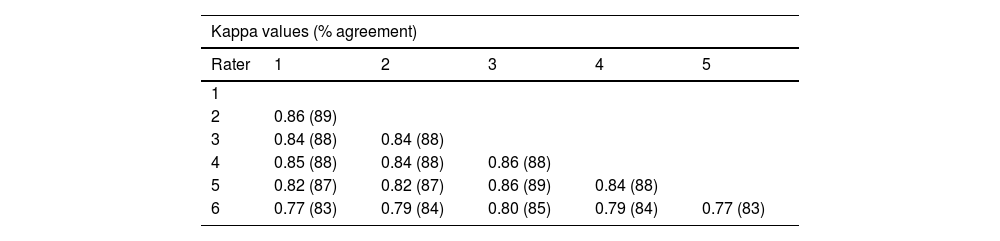

Table 3. Paired comparison of agreement and reliability in patient assessment forms among the six raters.

Agreement values for the rater pairs ranged from 83% to 89% and κ values from 0.77 to 0.86 (Table 3).
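Pairwise reliability can be sketched in Python as percentage agreement plus Cohen’s κ for each pair of raters. The paper reports a κ statistic per pair without naming the variant, so the use of Cohen’s κ here is an assumption, as are the function names and the toy labels. Under the design described in Methods, any two raters both classified the 120 forms created by the other four clinicians, so `all_pairs` compares each pair only on the forms they share.

```python
from collections import Counter
from itertools import combinations


def pairwise_agreement_and_kappa(labels_a, labels_b):
    """Percentage agreement and Cohen's kappa for two raters labelling the same forms."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n  # observed agreement
    marg_a, marg_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(marg_a[c] * marg_b[c] for c in marg_a) / (n * n)  # chance agreement
    kappa = (p_o - p_e) / (1 - p_e) if p_e < 1 else float("nan")
    return 100 * p_o, kappa


def all_pairs(ratings_by_rater):
    """ratings_by_rater: {rater: {form_number: label}}; compares each pair on shared forms."""
    results = {}
    for a, b in combinations(sorted(ratings_by_rater), 2):
        shared = sorted(set(ratings_by_rater[a]) & set(ratings_by_rater[b]))
        results[(a, b)] = pairwise_agreement_and_kappa(
            [ratings_by_rater[a][f] for f in shared],
            [ratings_by_rater[b][f] for f in shared],
        )
    return results


# Hypothetical labels for one pair of raters on the same eight forms
rater_a = ["Der", "Der", "Der", "SS", "MUR", "MUR", "SIJ", "Der"]
rater_b = ["Der", "Der", "MUR", "SS", "MUR", "SS", "SIJ", "Der"]
agreement, kappa = pairwise_agreement_and_kappa(rater_a, rater_b)
print(f"{agreement:.0f}% agreement, kappa = {kappa:.2f}")  # 75% agreement, kappa = 0.64
```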

Derangement syndrome had the highest inter-rater reliability (Fleiss’ κ = 0.94, 95% CI 0.89, 0.99), whereas Spinal Stenosis had the lowest (Fleiss’ κ = 0.58, 95% CI 0.53, 0.63). Agreement values ranged from 98% (Derangement syndrome) to 66% (Spinal Stenosis) (Table 4). According to the Fleiss’ κ values, reliability for individual classifications ranged from moderate (Spinal Stenosis) to almost perfect (Derangement syndrome).
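The verbal labels in these results follow the Landis and Koch bands listed in the Analysis section. The small Python helper below makes that mapping explicit; treating each upper limit as inclusive, and labelling negative values "poor" (a band from Landis and Koch’s original scale that the Analysis section does not list), are our assumptions.

```python
def landis_koch(kappa):
    """Verbal label for a kappa value on the Landis and Koch scale."""
    if kappa > 1:
        raise ValueError("kappa cannot exceed 1")
    if kappa < 0:
        return "poor"  # band from Landis and Koch's original scale
    for upper, label in [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
                         (0.80, "substantial"), (1.00, "almost perfect")]:
        if kappa <= upper:
            return label
    raise ValueError(f"invalid kappa: {kappa}")  # e.g. NaN


# Values reported in this paper
for name, k in [("overall", 0.82), ("Derangement", 0.94),
                ("Spinal Stenosis", 0.58), ("lowest rater pair", 0.77)]:
    print(f"{name}: {k} -> {landis_koch(k)}")
# overall: 0.82 -> almost perfect
# Derangement: 0.94 -> almost perfect
# Spinal Stenosis: 0.58 -> moderate
# lowest rater pair: 0.77 -> substantial
```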

Table 4. Prevalence of the provisional Mechanical Diagnosis and Therapy (MDT) classifications in the 180 patient assessment forms, the classifications assigned by the six raters, and Fleiss’ κ values for each classification.

Der, Derangement syndrome; Dys, Dysfunction syndrome; Post, Postural syndrome; MUR, Mechanically Unresponsive Radicular syndrome; MI, Mechanically Inconclusive; SIJ, sacroiliac joint pain; infl, Inflammatory Arthropathy/Chemical; Chr Pain, Chronic Pain syndrome; Struc Com, Structurally Compromised; Trauma, Trauma/Recovering Trauma; Ser Path, Serious Pathology; Post Surg, Post Surgery; CI, confidence interval; NA, not applicable.


Discussion: To our knowledge, this is the first study to assess the inter-rater reliability of the McKenzie Method of MDT in the examination of spinal pain that encompasses all of the individual OTHER classifications. Inter-rater reliability among the six raters classifying patient assessment forms was almost perfect. In addition, κ values between pairs of raters ranged from 0.77 to 0.86, indicating substantial to almost perfect reliability for every pair.

The results of the current study are consistent with the conclusions of the review by Garcia et al.,32 who concluded that the MDT system demonstrates acceptable inter-rater reliability when therapists who have completed the credentialing examination classify patients with back pain into the main syndromes. Werneke et al. reported only fair to moderate reliability (κ = 0.37 to 0.44) for lumbar classification among therapists with pre-credentialed training, despite high observed agreement (86–91%).33 It has been proposed that this paradox arises from the sensitivity of the κ statistic to a skewed prevalence of ratings, so that conclusions drawn from κ alone may be misleading.28,34,35 The high prevalence of Derangement (81–86%) and the low prevalence of the other classifications (0–4.6%) in that study probably contributed to the low κ values despite high observed agreement.28,29,33-36 In the current study, the prevalence of the classifications was much less skewed, and observed agreement and κ values were therefore more closely aligned.
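The prevalence effect can be illustrated with a hypothetical two-rater, two-category example in Python (the counts are invented and are not data from Werneke et al. or from this study; `cohen_kappa_2x2` is a name introduced here). Both tables have 90% observed agreement, but when one category dominates, the agreement expected by chance rises and Cohen’s κ falls.

```python
def cohen_kappa_2x2(both_a, a_then_b, b_then_a, both_b):
    """Observed agreement and Cohen's kappa from the four cells of a 2 x 2 table.

    both_a   -- both raters chose category A
    a_then_b -- rater 1 chose A, rater 2 chose B
    b_then_a -- rater 1 chose B, rater 2 chose A
    both_b   -- both raters chose category B
    """
    n = both_a + a_then_b + b_then_a + both_b
    p_o = (both_a + both_b) / n
    rater1_a = (both_a + a_then_b) / n  # share of forms rater 1 put in A
    rater2_a = (both_a + b_then_a) / n  # share of forms rater 2 put in A
    p_e = rater1_a * rater2_a + (1 - rater1_a) * (1 - rater2_a)
    return p_o, (p_o - p_e) / (1 - p_e)


# Hypothetical counts for 100 forms, same observed agreement in both cases
skewed = cohen_kappa_2x2(85, 5, 5, 5)     # category A (e.g. Derangement) in 90% of ratings
balanced = cohen_kappa_2x2(45, 5, 5, 45)  # category A in 50% of ratings
for name, (p_o, kappa) in [("skewed", skewed), ("balanced", balanced)]:
    print(f"{name}: agreement {p_o:.2f}, kappa {kappa:.2f}")
# skewed: agreement 0.90, kappa 0.44
# balanced: agreement 0.90, kappa 0.80
```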

The highest level of reliability was observed for Derangement syndrome (κ = 0.94) and the lowest for Spinal Stenosis (κ = 0.58). This difference may be attributable to their respective clinical presentations. Derangement syndrome is characterized by a sustained reduction or elimination of symptoms through repeated or sustained loading strategies in a specific direction, which makes it easily identifiable.3,10,37 In contrast, the lower reliability for Spinal Stenosis may reflect overlap between its operational definition and that of MUR. The remaining OTHER classifications achieved substantial to almost perfect reliability, suggesting that their operational definitions are well described and recognisable at the initial visit. (See Supplementary material 1 and 2, from the Part A Course Manual, McKenzie Institute International ©.)

One of the OTHER classifications is Serious Pathology. At Rugpoli, serious pathology is normally identified by the medical doctor (MSK physician or neurologist) who screens patients first during the intake procedure; consequently, this classification did not appear among the patient assessment forms created and rated in this study.

Strengths and limitations: Strengths of this study include the use of assessment forms from real patients and a formal sample size calculation, which resulted in each of six raters classifying 150 assessment forms. A limitation is that the raters were highly trained and experienced in using the MDT method in a clinical setting where the prevalence of OTHER classifications may differ substantially from that in primary care. These data are therefore less generalisable to less trained physical therapists or to those working in settings where OTHER classifications are less prevalent. In addition, written patient assessment forms eliminate the error caused by changes in patient presentation between raters, but they may not capture the full clinical picture seen in practice, where nonverbal communication may influence testing decisions and clinical reasoning. Classifying from written forms may therefore be easier than classifying patients directly, which could have inflated the κ values. Further research with real patients is needed to establish reliability in clinical practice.

Conclusion: To our knowledge, this is the first study to assess the inter-rater reliability of the McKenzie Method of MDT in the examination of spinal pain that encompasses all of the individual OTHER classifications. Inter-rater reliability among six raters using patient assessment forms was almost perfect. Reliability for individual classifications ranged from moderate (Spinal Stenosis) to almost perfect (Derangement syndrome), and reliability between pairs of raters ranged from substantial to almost perfect.

The researchers declare no competing interests.

The authors thank Henk Tempelman, Hanneke Meihuizen, Daniel Poynter, Peter Hagel, Marijke Mol, and Loes Geerlings for creating and rating patient assessment forms.